Arısoy Saraçlar, Ebru

Name Variants

Arısoy, Ebru

Email Address

saraclare@mef.edu.tr

Main Affiliation

02.05. Department of Electrical and Electronics Engineering

Status

Current Staff

Sustainable Development Goals

| SDG | Goal | Research Products |
|---|---|---|
| 2 | ZERO HUNGER | 0 |
| 16 | PEACE, JUSTICE AND STRONG INSTITUTIONS | 0 |
| 1 | NO POVERTY | 0 |
| 11 | SUSTAINABLE CITIES AND COMMUNITIES | 0 |
| 7 | AFFORDABLE AND CLEAN ENERGY | 0 |
| 10 | REDUCED INEQUALITIES | 0 |
| 3 | GOOD HEALTH AND WELL-BEING | 0 |
| 6 | CLEAN WATER AND SANITATION | 0 |
| 9 | INDUSTRY, INNOVATION AND INFRASTRUCTURE | 0 |
| 12 | RESPONSIBLE CONSUMPTION AND PRODUCTION | 0 |
| 5 | GENDER EQUALITY | 0 |
| 14 | LIFE BELOW WATER | 0 |
| 13 | CLIMATE ACTION | 0 |
| 15 | LIFE ON LAND | 0 |
| 8 | DECENT WORK AND ECONOMIC GROWTH | 0 |
| 17 | PARTNERSHIPS FOR THE GOALS | 0 |
| 4 | QUALITY EDUCATION | 1 |

Documents

42

Citations

1380

h-index

14

Documents

29

Citations

633

Scholarly Output

19

Articles

0

Views / Downloads

3765/2351

Supervised MSc Theses

3

Supervised PhD Theses

0

WoS Citation Count

83

Scopus Citation Count

47

WoS h-index

3

Scopus h-index

5

Patents

0

Projects

3

WoS Citations per Publication

4.37

Scopus Citations per Publication

2.47

Open Access Source

4

Supervised Theses

3

| Journal | Count |
|---|---|
| 2020 28th Signal Processing and Communications Applications Conference (SIU) | 3 |
| Turkish Natural Language Processing | 2 |
| 2024 Joint International Conference on Computational Linguistics, Language Resources and Evaluation, LREC-COLING 2024 - Main Conference Proceedings -- Joint 30th International Conference on Computational Linguistics and 14th International Conference on Language Resources and Evaluation, LREC-COLING 2024 -- 20 May 2024 through 25 May 2024 -- Hybrid, Torino -- 199620 | 1 |
| Conference: 16th Annual Conference of the International-Speech-Communication-Association (INTERSPEECH 2015) Location: Dresden, GERMANY Date: SEP 06-10, 2015 | 1 |
| Conference: 17th Annual Conference of the International-Speech-Communication-Association (INTERSPEECH 2016) Location: San Francisco, CA Date: SEP 08-12, 2016 | 1 |

Current Page: 1 / 3

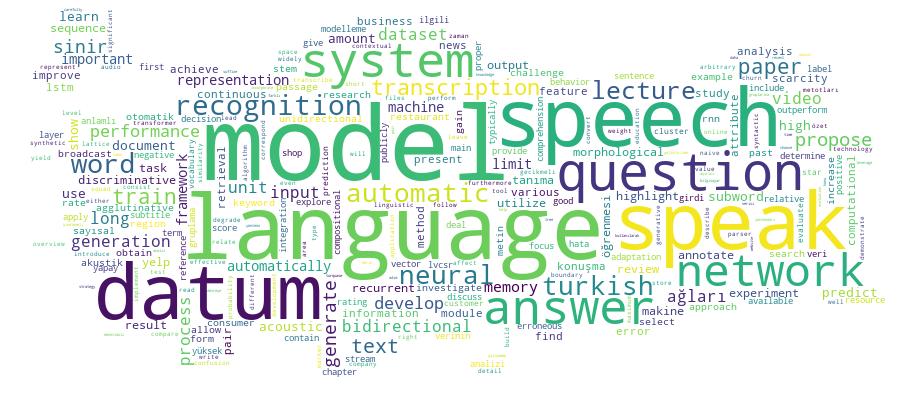
