Aydın, Yusuf

Name Variants

Aydın, Yusuf

Aydin, Yusuf

Email Address

aydiny@mef.edu.tr

Main Affiliation

02.05. Department of Electrical and Electronics Engineering

Status

Current Staff

Sustainable Development Goals

| SDG | Goal | Research Products |
|---|---|---|
| 1 | No Poverty | 0 |
| 2 | Zero Hunger | 0 |
| 3 | Good Health and Well-Being | 0 |
| 4 | Quality Education | 0 |
| 5 | Gender Equality | 0 |
| 6 | Clean Water and Sanitation | 0 |
| 7 | Affordable and Clean Energy | 0 |
| 8 | Decent Work and Economic Growth | 0 |
| 9 | Industry, Innovation and Infrastructure | 3 |
| 10 | Reduced Inequalities | 0 |
| 11 | Sustainable Cities and Communities | 0 |
| 12 | Responsible Consumption and Production | 0 |
| 13 | Climate Action | 0 |
| 14 | Life Below Water | 0 |
| 15 | Life on Land | 0 |
| 16 | Peace, Justice and Strong Institutions | 0 |
| 17 | Partnerships for the Goals | 0 |

| Metric | Value |
|---|---|
| Documents | 15 |
| Citations | 307 |
| h-index | 11 |
| Documents | 12 |
| Citations | 251 |

| Metric | Value |
|---|---|
| Scholarly Output | 8 |
| Articles | 3 |
| Views / Downloads | 2007 / 6910 |
| Supervised MSc Theses | 0 |
| Supervised PhD Theses | 0 |
| WoS Citation Count | 94 |
| Scopus Citation Count | 107 |
| WoS h-index | 4 |
| Scopus h-index | 4 |
| Patents | 0 |
| Projects | 1 |
| WoS Citations per Publication | 11.75 |
| Scopus Citations per Publication | 13.38 |
| Open Access Source | 2 |
| Supervised Theses | 0 |
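The WoS and Scopus totals, citations per publication, and h-index values above can be recomputed from the per-item citation counts shown in the publication listing below. The following is a minimal sketch. It assumes that an item showing no WoS or Scopus count has 0 citations in that database. The function and variable names are illustrative.

Citations per publication is total citations divided by the number of items (8). The h-index is the largest $h$ such that $h$ items each have at least $h$ citations.

```python
# Per-item citation counts copied from the publication listing, in listing order:
# UAV simulator, conflict resolution (2023), collaborative drilling, curved-surface drilling,
# ML conflict resolution (2025), human force scaling, live demo, haptic-skill learning.
# Assumption: an item with no WoS/Scopus count shown is counted as 0.
WOS = [0, 11, 21, 17, 3, 42, 0, 0]
SCOPUS = [1, 11, 24, 18, 4, 49, 0, 0]


def citations_per_publication(citations: list[int]) -> float:
    """Total citations divided by the number of publications."""
    return sum(citations) / len(citations)


def h_index(citations: list[int]) -> int:
    """Largest h such that h publications each have at least h citations."""
    h = 0
    for rank, count in enumerate(sorted(citations, reverse=True), start=1):
        if count < rank:
            break  # counts are sorted, so no later item can extend h
        h = rank
    return h


if __name__ == "__main__":
    for source, counts in (("WoS", WOS), ("Scopus", SCOPUS)):
        print(source, sum(counts), f"{citations_per_publication(counts):.2f}", h_index(counts))
```

With these counts, the totals are 94 (WoS) and 107 (Scopus). That gives 94 / 8 = 11.75 and 107 / 8 = 13.375, which is shown as 13.38. Both h-index values come out as 4, matching the profile.

The earlier Documents / Citations / h-index figures (15 / 307 / 11 and 12 / 251) cannot be reproduced this way. They appear to cover more publications than the eight listed here.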

| Venue | Count |
|---|---|
| 2023 30th IEEE International Conference on Electronics, Circuits and Systems (ICECS) | 2 |
| 2022 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS) | 1 |
| 18th International Conference on Human-Robot Interaction (HRI), Mar 13-16, 2023, Stockholm, Sweden | 1 |
| IEEE 20th International Conference on Automation Science and Engineering (CASE), Aug 28-Sep 1, 2024, Bari, Italy | 1 |
| IEEE Transactions on Haptics | 1 |

Scopus Quartile Distribution

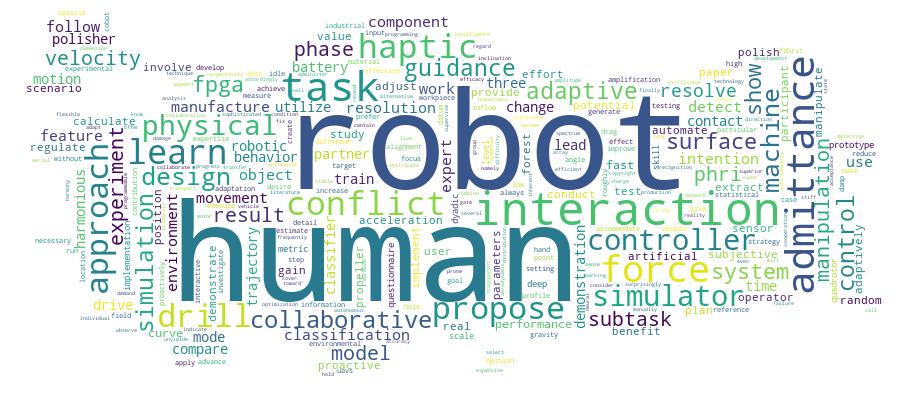

Scholarly Output Search Results

Conference Object (Citation - Scopus: 1)

Design and FPGA Implementation of UAV Simulator for Fast Prototyping (IEEE, 2023)

Aydın, Yusuf; Ayhan, Tuba; Akyavaş, İrfan

As motor and battery cell technology and its production continue to advance, unmanned aerial vehicles (UAVs) are gaining increasing acceptance and popularity. Unfortunately, designing and prototyping a UAV is an expensive and lengthy process.

This paper proposes a fast, component-based simulation environment for UAVs so that designs can be roughly tested without risk of damage. Moreover, the simulator shows the combined effect of individual component choices, which reduces design time. The simulator is flexible in that detailed aerodynamic effects and models of selected components can be included.

In this work, the simulator is proposed and model parameters are extracted for a particular UAV to test it. The simulator is then implemented on a field-programmable gate array (FPGA) to increase simulation speed. It calculates the battery state of charge (SOC) and the position, velocity, and acceleration of the UAV, accounting for gravity, drag, and propeller air inflow velocity. The simulator runs on the FPGA fabric of an AMD-XCKU13P with a simulation step of 1 ms.

Conference Object (Citation - WoS: 11; Citation - Scopus: 11)

Resolving Conflicts During Human-Robot Co-Manipulation (IEEE Computer Society, 2023)

Al-Saadi, Zaid; Hamad, Yahya M.; Aydin, Yusuf; Kucukyilmaz, Ayse; Basdogan, Cagatay

This paper proposes a machine learning (ML) approach to detect and resolve motion conflicts that occur between a human and a proactive robot during the execution of a physically collaborative task. We train a random forest classifier to distinguish between harmonious and conflicting human-robot interaction behaviors during object co-manipulation. Kinesthetic information generated through the teamwork is used to describe the interactive quality of the collaboration.
As such, we demonstrate that features derived from haptic (force/torque) data are sufficient to classify whether the human and the robot are manipulating the object in harmony or are in conflict.

A conflict resolution strategy is implemented to get the robotic partner to do two things. It contributes proactively to the task via online trajectory planning whenever interactive motion patterns are harmonious, and it follows the human's lead when a conflict is detected. An admittance controller regulates the physical interaction between the human and the robot during the task, which enables the robot to follow the human passively when there is a conflict. An artificial potential field is used to control the robot motion proactively when the partners work in harmony.

An experimental study is designed to create scenarios involving harmonious and conflicting interactions during collaborative manipulation of an object, and to create a dataset to train and test the random forest classifier. The results of the study show that ML can successfully detect conflicts. They also show that the proposed conflict resolution mechanism significantly reduces human force and effort compared to both a passive robot that always follows the human partner and a proactive robot that cannot resolve conflicts.

Article (Citation - WoS: 21; Citation - Scopus: 24)

An Adaptive Admittance Controller for Collaborative Drilling With a Robot Based on Subtask Classification Via Deep Learning (Elsevier, 2022)

Başdoğan, Çağatay; Niaz, P. Pouya; Aydın, Yusuf; Güler, Berk; Madani, Alireza

In this paper, we propose a supervised learning approach based on an Artificial Neural Network (ANN) model for real-time classification of subtasks in a physical human–robot interaction (pHRI) task involving contact with a stiff environment. In this regard, we consider three subtasks for a given pHRI task: Idle, Driving, and Contact.
Based on this classification, the parameters of an admittance controller that regulates the interaction between the human and the robot are adjusted adaptively in real time. This makes the robot more transparent to the operator (i.e., less resistant) during the Driving phase and more stable during the Contact phase. The Idle phase is primarily used to detect the initiation of the task.

Experimental results have shown that the ANN model can learn to detect the subtasks under different admittance controller conditions with an accuracy of 98% for 12 participants. Finally, we compare admittance adaptation based on the proposed subtask classifier against an admittance controller with fixed parameters. The adaptation leads to 20% lower human effort (i.e., higher transparency) in the Driving phase and 25% lower oscillation amplitude (i.e., higher stability) during drilling in the Contact phase.

Conference Object (Citation - WoS: 17; Citation - Scopus: 18)

Robot-Assisted Drilling on Curved Surfaces With Haptic Guidance Under Adaptive Admittance Control (IEEE, 2022)

Başdoğan, Çağatay; Niaz, Pouya P.; Aydın, Yusuf; Güler, Berk; Madani, Alireza

Drilling a hole in a curved surface at a desired angle is prone to failure when done manually. Drill alignment is difficult and the task is inherently unstable, which can cause injury and fatigue to workers. On the other hand, it can be impractical to fully automate such a task in real manufacturing environments. Parts arriving at an assembly line can have various complex shapes where drill point locations are not easily accessible, which makes automated path planning difficult.

In this work, an adaptive admittance controller with 6 degrees of freedom is developed and deployed on a KUKA LBR iiwa 7 cobot. With it, the operator can comfortably manipulate a drill mounted on the robot with one hand and drill holes in a curved surface. The cobot provides haptic guidance, and an AR interface provides visual guidance.
Real-time adaptation of the admittance damping provides more transparency when driving the robot in free space while ensuring stability during drilling. The user first brings the drill sufficiently close to the drill target and roughly aligns it with the desired drilling angle. The haptic guidance module then fine-tunes the alignment and constrains the user's movement to the drilling axis only, after which the operator simply pushes the drill into the workpiece with minimal effort.

Two sets of experiments were conducted. Experiment I quantified the potential benefits of the haptic guidance module. Experiment II assessed the practical value of the proposed pHRI system for real manufacturing settings, based on the subjective opinions of the participants. The results of Experiment I, conducted with 3 naive participants, show that haptic guidance improves task completion time by 26% while decreasing human effort by 16% and muscle activation levels by 27%, compared to the no-haptic-guidance condition. The results of Experiment II, conducted with 3 experienced industrial workers, show that the proposed system is perceived to be easy to use, safe, and helpful in carrying out the drilling task.

Article (Citation - WoS: 3; Citation - Scopus: 4)

A Machine Learning Approach To Resolving Conflicts in Physical Human-Robot Interaction (Association for Computing Machinery, 2025)

Ulas Dincer, Enes; Al-Saadi, Zaid; Hamad, Y.M.; Aydın, Yusuf; Kucukyilmaz, A.; Basdogan, C.

As artificial intelligence techniques become more sophisticated, we anticipate that robots collaborating with humans will develop their own intentions, leading to potential conflicts in interaction. This development calls for advanced conflict resolution strategies in physical human-robot interaction (pHRI), a key focus of our research. We use a machine learning (ML) classifier to detect conflicts during co-manipulation tasks, and we adapt the robot's behavior accordingly using an admittance controller.
In our approach, we focus on two groups of interactions, namely "harmonious" and "conflicting." In harmonious interactions, the human and the robot work together to transport an object toward the same target. In conflicting interactions, the human changes the manipulation plan, e.g., by changing the direction of movement or the parking location of the object.

Co-manipulation scenarios involving 20 participants were designed to investigate the efficacy of the proposed ML approach. Task performance achieved by the ML approach was compared against three alternative approaches:

(a) a rule-based (RB) approach, in which the rules distinguishing interaction behaviors were derived from statistical distributions of haptic features;

(b) an unyielding robot that is proactive during harmonious interactions but does not resolve conflicts; and

(c) a passive robot that always follows the human partner. This mode of cooperation is known as "hand guidance" in the pHRI literature and is frequently used in industrial settings for so-called "teaching" of a trajectory to a collaborative robot.

The results show that the proposed ML approach is superior to the others in task performance. However, a detailed questionnaire administered after the experiments measured the participants' subjective opinions across a spectrum of dimensions. It reveals that, surprisingly, the most preferred mode of interaction with the robot is the passive one. This preference indicates a strong inclination toward an interaction mode that gives more control to humans and is less demanding, even if it is not the most efficient in task performance. Hence, there is a clear trade-off between task performance and the mode of interaction humans prefer with a robot, and a well-balanced approach is necessary for designing effective pHRI systems in the future.
© 2025 Copyright held by the owner/author(s).

Article (Citation - WoS: 42; Citation - Scopus: 49)

Adaptive Human Force Scaling Via Admittance Control for Physical Human-Robot Interaction (IEEE, 2021)

Başdoğan, Çağatay; Aydın, Yusuf; Hamad, Yahya M.

The goal of this article is to design an admittance controller that lets a robot adaptively change its contribution to a collaborative manipulation task executed with a human partner, in order to improve task performance. This is achieved by adaptively scaling the human force based on her/his movement intention while paying attention to the requirements of different task phases.

In our approach, the human's movement intentions are estimated from the measured human force and the velocity of the manipulated object, and converted to a quantitative value using a fuzzy logic scheme. This value is then used as a variable gain in an admittance controller to adaptively adjust the robot's contribution to the task without changing the admittance time constant.

We demonstrate the benefits of the proposed approach with a pHRI experiment using Fitts' reaching movement task. The results of the experiment show that there is (a) an optimum admittance time constant that maximizes human force amplification and (b) a desirable admittance gain profile that leads to more effective co-manipulation in terms of overall task performance.

Conference Object

Live Demo: Design and FPGA Implementation of a Component Level UAV Simulator (IEEE, 2023)

Aydın, Yusuf; Ayhan, Tuba; Akyavaş, İrfan

In this work, we introduce a fast, component-based simulation environment for UAVs. The simulator framework is proposed as a combination of three sub-models: (i) battery, (ii) BLDC motor and propeller, and (iii) dynamic model. The model parameters are extracted for a particular UAV to test the simulator. The simulator is implemented on an FPGA to increase simulation speed.
The simulator calculates the battery SOC and the position, velocity, and acceleration of the UAV, accounting for gravity, drag, and propeller air inflow velocity. It runs on the FPGA fabric of a Xilinx XCKU13P with a simulation step of 1 ms.

Conference Object

Robotic Learning of Haptic Skills From Expert Demonstration for Contact-Rich Manufacturing Tasks (IEEE, 2024)

Hamdan, Sara; Aydın, Yusuf; Oztop, Erhan; Basdogan, Cagatay

We propose a learning from demonstration (LfD) approach that uses an interaction (admittance) controller and two force sensors. With it, the robot learns from demonstrations the force an expert applies in contact-rich tasks such as robotic polishing. Our goal is to use a machine learning (ML) approach to equip the robot with the haptic expertise of an expert. At the same time, the interaction controller gives the user the flexibility to intervene in the task at any point when necessary.

The use of two force sensors, a pivotal concept in this study, lets us gather environmental data that is crucial for training the system. This allows the system to accommodate workpieces with diverse material and surface properties and to maintain contact between the polisher and their surfaces.

In the demonstration phase, an expert guides the robot through a polishing task. Along the trajectory the expert follows, two separate force sensors record the force applied by the human ($F_h$) and the interaction force ($F_{int}$). Information about the environment is extracted as $F_{env} = F_h - F_{int}$. An admittance controller takes the interaction force as its input and outputs a reference velocity. The robot's internal motion controller (PID) tracks this velocity to regulate the interactions between the polisher and the surface of a workpiece.
A multilayer perceptron (MLP) model was trained to learn the human force profile. Its inputs are the Cartesian position and velocity of the polisher, the environmental force ($F_{env}$), and the friction coefficient between the polisher and the surface.

During the deployment phase, the robot executes the task autonomously. The human force estimated by the system ($\hat{F}_h$) is used to balance the reaction forces coming from the environment. It is also used to calculate the force ($\hat{F}_h - F_{env}$) that is input to the admittance controller, which generates a reference velocity trajectory for the robot to follow.

We designed three use-case scenarios to demonstrate the benefits of the proposed system. The presented use cases highlight the capability of the proposed pHRI system to learn from human expertise and to adjust its force to material and surface variations during automated operations, while still accommodating manual interventions as needed.
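Several abstracts above rely on an admittance controller that turns a measured force into a reference velocity: collaborative drilling, curved-surface drilling, adaptive force scaling, and haptic-skill learning. The following is a minimal single-axis sketch. It assumes the common first-order law $M\dot{v} + Bv = F$, discretized with explicit Euler. The class name, the per-subtask damping schedule, and all numeric values are hypothetical and not taken from the papers.

The sketch also shows two related operations under this first-order assumption. First, it changes the gain $K = 1/B$ while holding the time constant $\tau = M/B$ fixed. Second, it forms the input $\hat{F}_h - F_{env}$ described in the haptic-skill abstract.

```python
from dataclasses import dataclass

# Hypothetical damping per subtask label [N*s/m]: lower in Driving (more transparent),
# higher in Contact (more stable). Illustrative values only, not from the papers.
DAMPING_BY_SUBTASK = {"Idle": 50.0, "Driving": 20.0, "Contact": 200.0}


@dataclass
class Admittance1D:
    """First-order admittance law M*dv/dt + B*v = F for one Cartesian axis."""
    mass: float            # virtual mass M [kg]
    damping: float         # virtual damping B [N*s/m]
    velocity: float = 0.0  # reference velocity passed to the motion controller [m/s]

    @property
    def time_constant(self) -> float:
        return self.mass / self.damping  # tau = M / B [s]

    @property
    def gain(self) -> float:
        return 1.0 / self.damping  # steady-state velocity per unit force

    def set_gain_keep_tau(self, gain: float) -> None:
        # Change K = 1/B while keeping tau = M/B fixed by rescaling M and B together.
        tau = self.time_constant
        self.damping = 1.0 / gain
        self.mass = tau * self.damping

    def set_subtask(self, label: str) -> None:
        # Swap in the damping assigned to a classified subtask; M is left unchanged.
        self.damping = DAMPING_BY_SUBTASK[label]

    def step(self, force: float, dt: float) -> float:
        # Explicit Euler update of M*dv/dt = F - B*v; returns the new reference velocity.
        accel = (force - self.damping * self.velocity) / self.mass
        self.velocity += accel * dt
        return self.velocity


def admittance_input(f_h_hat: float, f_env: float) -> float:
    # Force fed to the controller during autonomous execution: estimated F_h minus F_env.
    return f_h_hat - f_env


if __name__ == "__main__":
    ctrl = Admittance1D(mass=2.0, damping=20.0)
    v = 0.0
    for _ in range(5000):           # 5 s at a 1 ms step
        v = ctrl.step(10.0, 0.001)  # constant 10 N push
    print(f"{v:.3f}")               # settles near F/B = 0.5 m/s
```

Running the file drives the controller with a constant 10 N push for 5 s. The velocity settles at the steady state $F/B = 0.5$ m/s. Explicit Euler is adequate here because the 1 ms step is far smaller than $\tau = 0.1$ s. A real controller would run one such law per Cartesian axis at the robot's control rate.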